EAN: An Efficient Attention Module Guided by Normalization for Deep Neural Networks

Photo by rawpixel on Unsplash

AAAI 2024 Accepted, 2nd Author, Supervised by Prof. Jiafeng Li, East China Normal University

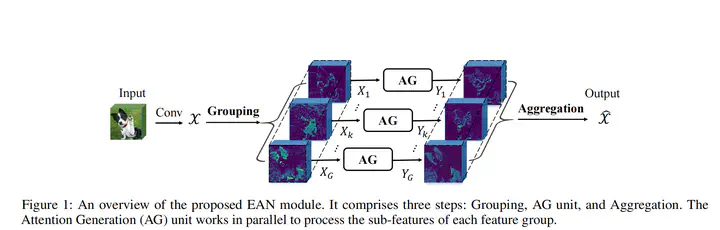

- Proposed an Efficient Attention module guided by Normalization (EAN), a plug-and-play module that exploits the intrinsic relationship between feature normalization and attention mechanisms to improve model efficiency and accuracy across various visual tasks.

- Built EAN around an Attention Generation (AG) unit that derives attention weights from parameter-efficient normalization, guiding the network to capture relevant semantic responses while suppressing irrelevant ones. The AG unit combines the strengths of normalization and attention in a single module to enhance feature representation.

- Evaluated EAN on multiple visual tasks, including image classification and object detection. In object detection on the MS COCO dataset, EAN outperformed the original ResNet50 and MobileNeXt by 5.5% and 5.4%, respectively, validating the superior accuracy and convergence of EAN compared to state-of-the-art methods.

- Conclusion: In this paper, we explore the intrinsic relationship between two widely used techniques for enhancing models: feature normalization and attention. Building on this relationship, we propose an Efficient Attention module guided by Normalization, named EAN. EAN incorporates an AG unit that derives attention weights from parameter-efficient normalization and guides the network to capture relevant semantic responses while suppressing irrelevant ones, unifying the strengths of normalization and attention in a single plug-and-play module. Extensive experiments on multiple benchmark datasets validate the superior accuracy and convergence of EAN compared to state-of-the-art methods.
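
The general idea of deriving attention weights from normalization, as described in the bullets above, can be illustrated with a small framework-free sketch. This summary does not give the AG unit's actual equations, so everything below is an assumption made for illustration: the function name `normalization_guided_attention`, the per-channel statistics, the affine parameters `gamma` and `beta`, and the sigmoid gate are hypothetical choices, not the paper's implementation.

```python
import math


def normalization_guided_attention(features, gamma, beta, eps=1e-5):
    """Gate each channel with weights derived from its own normalized values.

    features: list of channels, each a list of floats (flattened spatial positions).
    gamma, beta: per-channel scale and shift (assumed learnable parameters).
    NOTE: hypothetical illustration, not the AG unit from the paper.
    """
    output = []
    for channel, g, b in zip(features, gamma, beta):
        # Per-channel mean and (biased) variance, as in common feature normalization.
        mean = sum(channel) / len(channel)
        var = sum((x - mean) ** 2 for x in channel) / len(channel)
        std = math.sqrt(var + eps)

        # Normalize, apply the affine transform, then squash to a (0, 1) gate.
        weights = [
            1.0 / (1.0 + math.exp(-(g * (x - mean) / std + b)))
            for x in channel
        ]

        # Reweight the original responses: above-mean responses are kept more
        # strongly, below-mean responses are suppressed (for positive gamma).
        output.append([x * w for x, w in zip(channel, weights)])
    return output


# Two channels with four spatial positions each.
features = [[0.0, 1.0, 2.0, 3.0], [5.0, 5.0, 5.0, 5.0]]
gated = normalization_guided_attention(features, gamma=[1.0, 1.0], beta=[0.0, 0.0])
```

In this sketch the only parameters are the per-channel `gamma` and `beta` that a normalization layer would already carry, which is one way to read "parameter-efficient". A constant channel normalizes to zero, so with `beta = 0` every position receives a gate of 0.5.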